Is it discriminatory to target digital ads based on a person's gender, age, or income? The United States Department of Housing and Urban Development says, "yes." Here's how Facebook's ads discriminate against protected classes.

A story broke on NPR at the end of March about the Department of Housing and Urban Development (HUD) filing a lawsuit against Facebook. HUD’s lawsuit claims that Facebook’s ad platform is discriminatory on the grounds of race, color, religion, sex, familial status, national origin, and disability.

How does Facebook’s ad platform work?

Imagine you’re a landlord with a vacancy at one of your properties, which is located in a secluded, predominantly white, English-speaking neighborhood that is home to families with college-age children.

To fill your vacancy, you decide to put an ad in your local paper, post it on Craigslist, and choose to advertise on Facebook. So you go to Facebook Business Manager, select the click-based objective and start to build your audience.

As recently as March 19th, you were able to target housing ads specifically based on gender or age. Advertisers also have the option to target audiences by language, income, and location, among many others.

In this situation, the landlord says, “I only want to show this rental property to men and women who are between 35 and 50 years old, are in the top 10% of income earners, and are within 25 miles of the property.” He also wants to use remarketing ads to show the ad to anyone who has visited the business’s Facebook page or Instagram profile. Let’s say this targeting approach leaves the advertiser with a pool of 100,000 “qualified” people.
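To make the landlord's targeting rules concrete, here is a minimal sketch in Python. It is not Facebook's implementation: the `Person` fields and the `in_target_audience` function are hypothetical names, and treating remarketing as an addition to the demographic rules (rather than a further restriction) is our reading of the scenario.

```python
from dataclasses import dataclass


@dataclass
class Person:
    age: int
    income_percentile: float  # 0-100, higher means higher income (assumed scale)
    miles_from_property: float
    visited_page: bool  # visited the business's Facebook page or Instagram profile


def in_target_audience(p: Person) -> bool:
    """Apply the landlord's targeting rules from the example above."""
    matches_demographics = (
        35 <= p.age <= 50
        and p.income_percentile >= 90  # top 10% of income earners
        and p.miles_from_property <= 25
    )
    # Assumption: remarketing adds past visitors regardless of the other rules.
    return matches_demographics or p.visited_page


people = [
    Person(age=42, income_percentile=95, miles_from_property=10, visited_page=False),
    Person(age=29, income_percentile=95, miles_from_property=10, visited_page=False),
    Person(age=29, income_percentile=40, miles_from_property=60, visited_page=True),
]
audience = [p for p in people if in_target_audience(p)]
print(len(audience))  # 2
```

The 29-year-old with a high income is filtered out on age alone, while the past page visitor gets in through remarketing.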

How does Facebook’s ad delivery algorithm work?

Facebook’s ad delivery algorithm tries to get advertisers the best result based on their objective.

Facebook takes your qualified pool of 100,000 people and factors in historical data like the performance of other housing ads, pages visited, posts liked, people they're friends with, or events attended. From there, it calculates who is most likely to engage with the advertisement.

Because the advertiser has a limited budget, let’s say $1,000, they can’t reach all 100,000 people. So there are two fences. The advertiser puts up the first fence, saying, "these 100,000 people can see my ad." Facebook’s ad delivery algorithm then puts up a second fence around the people who are most likely to engage with the ad, and delivers the ad only to those individuals.
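The second fence can be sketched in a few lines of Python. This is a simplified illustration, not how Facebook actually delivers ads (real delivery runs through an auction). The `deliver` function, the names, the scores, and the prices are all made up for the example.

```python
def deliver(audience, budget_cents, cost_per_impression_cents):
    """Rank the audience by predicted engagement and stop when the budget runs out.

    audience is a list of (person_id, predicted_click_probability) pairs.
    """
    max_impressions = budget_cents // cost_per_impression_cents
    ranked = sorted(audience, key=lambda pair: pair[1], reverse=True)
    return [person_id for person_id, _ in ranked[:max_impressions]]


# Everyone here already passed the advertiser's targeting (the first fence).
audience = [("ana", 0.02), ("ben", 0.09), ("cam", 0.05), ("dee", 0.01)]

# Hypothetical prices: a $1.00 budget at $0.50 per impression buys two impressions.
print(deliver(audience, budget_cents=100, cost_per_impression_cents=50))  # ['ben', 'cam']
```

All four people were eligible, but only the two with the highest predicted click probability ever see the ad.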

So even though the advertiser doesn’t explicitly exclude a person based on some of the protected classes, Facebook's algorithm has access to far more information it can use to prejudicially deliver the advertisement.

Herein lies the problem.

Even if the landlord were open to any person, of any age and any income, from anywhere, Facebook's algorithm would still prejudicially determine who sees the ad based on historical information and known behaviors.

For instance, from Facebook's perspective, those who speak English as a second language aren't as likely as native English speakers to click an ad, click through to a website, and fill out a form to visit the property, so the algorithm gives them less chance of seeing the ad.
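One way to see this effect is to compare each group's share of the eligible audience with its share of the people who actually receive the ad. The Python sketch below uses made-up groups and scores, and the `share_by_group` helper is a hypothetical name. It illustrates the mechanism and is not a measurement of Facebook's real behavior.

```python
from collections import Counter

# (group, predicted_click_probability); all values are invented for illustration.
audience = [
    ("native English speaker", 0.08),
    ("native English speaker", 0.07),
    ("native English speaker", 0.06),
    ("English as a second language", 0.05),
    ("English as a second language", 0.04),
    ("English as a second language", 0.03),
]


def share_by_group(rows):
    """Return each group's fraction of the given rows."""
    counts = Counter(group for group, _ in rows)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


# The advertiser excluded no one, but the budget only covers three impressions.
delivered = sorted(audience, key=lambda row: row[1], reverse=True)[:3]

print(share_by_group(audience))   # each group is half of the eligible audience
print(share_by_group(delivered))  # only one group appears among those who saw the ad
```

The advertiser never touched a language setting, yet one group makes up half the audience and none of the delivery.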

So the Department of Housing and Urban Development is saying that for housing, jobs, and lending, Facebook is discriminatory because they are choosing who to deliver ads to via their algorithm.

Let's take a closer look with two of our top strategists

Robert Johns: So by nature of the targeting that they give to their advertisers and the targeting they do themselves, HUD is claiming that Facebook is discriminatory.

However, by definition, every advertiser discriminates in some way or another.

For example, Louis Vuitton is not going to market their handbags to people who don’t make enough money to afford their bags. That's not a smart use of money. So to say that discrimination is always wrong is, I think, incorrect. However, discriminating against people of a specific ethnicity, race, or background is an issue.

Brady: The critical distinction here is the difference between advertising a product and advertising housing.

Housing, jobs, and lending have federal laws in place to protect against discrimination based on protected classes such as race, gender, orientation, religion, etc. Many of us know about these protected classes if we’ve ever read our employee handbook. The same is true for housing.

There are a few ways that we can see this play out

In the wake of the Cambridge Analytica scandal during the 2016 Presidential Election, Facebook has made sweeping changes to their ad platform including removing manipulative targeting methods and their Partner Categories feature.

On March 19th, Facebook announced it has taken further steps to protect against discrimination in housing ads. Housing advertisers can no longer target by age or gender, and some other targeting options are gone as well. Perhaps this will make advertisers work that much harder, or find other ways to distribute their ad buys.

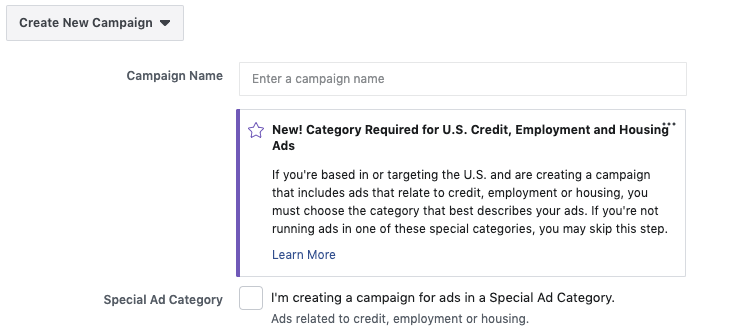

As of today, there is a new "Special Ad Category" for advertisers intending to "target audiences in the US and want to create an ad campaign that relates to credit, employment or housing." When advertisers select this option, Facebook restricts certain targeting options and Lookalike Audiences.

Surprisingly or not, HUD went forward with their lawsuit anyway, basically saying to Facebook, “Hey, we don't think that you're going far enough.”

What does this lawsuit mean for other advertising platforms?

HUD is investigating Twitter and Google for the same reasons. HUD reached out to these platforms to make sure that their algorithms are not playing into these discriminatory biases.

This idea of discriminatory algorithms that reinforce biases actually comes up a lot. Search algorithms are designed to return the most relevant results, and social algorithms like those behind Facebook, Instagram, and Reddit are designed to keep users engaged by showing them more of what they are interested in.

By their very nature, algorithms are perpetuating biases and taking people further down the rabbit hole. Most noticeably, Reddit has been singled out for hate speech. It’s a dangerous thing.

Or take Instagram, for example. You watch one funny dog video, and then for the next month there are funny dog videos in your discover feed.

Robert Johns: But there's not a little man behind the curtain like in The Wizard of Oz who is selectively saying, “don't show this housing ad to this person.” It's a program, working as designed, based on human inputs. It's not explicitly discriminatory.

Brady: Correct. Facebook’s ad delivery algorithm is a giant equation that says, factor in everything we know about these people and spit out a number. The higher the number, the more likely that person is to engage with the advertisement.

Based on what an advertiser is willing to spend and the likelihood of someone engaging, Facebook decides who sees the ad. Facebook's ad delivery algorithm is discriminatory.
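As a rough picture of that "giant equation," here is a minimal Python sketch of a logistic scoring function. We don't know what Facebook's actual model looks like. The feature names, the weights, and the `engagement_score` function are all assumptions chosen for illustration.

```python
import math

# Hypothetical weights; a real system would learn these from historical data.
WEIGHTS = {
    "clicked_housing_ads_before": 1.2,
    "visited_advertiser_page": 0.8,
    "liked_similar_posts": 0.5,
}
BIAS = -3.0


def engagement_score(features):
    """Turn 0/1 features into a number between 0 and 1 (higher = more likely to engage)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1 / (1 + math.exp(-z))


frequent_clicker = {
    "clicked_housing_ads_before": 1,
    "visited_advertiser_page": 1,
    "liked_similar_posts": 1,
}
rare_clicker = {
    "clicked_housing_ads_before": 0,
    "visited_advertiser_page": 0,
    "liked_similar_posts": 1,
}

print(engagement_score(frequent_clicker) > engagement_score(rare_clicker))  # True
```

No protected class appears anywhere in the inputs. The concern is that behavioral signals like these can still track with race, language, or familial status, and the score carries that pattern forward.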

Robert: So there’s a difference between buying a product and buying a house. How did Facebook get into this position? Did they not realize or did they not care?

Brady: I'm not sure how they got into this situation. Facebook’s bottom line comes from the data they collect and sell back to advertisers. So whether it was intentional or an “oversight,” selling this information to advertisers is how Facebook pays its stockholders.

I think it's more of an “ask for forgiveness rather than permission” kind of scenario. I personally think that Facebook will keep doing as it pleases until someone calls them out, and as things come to light about people manipulating the platform or being discriminatory, Facebook makes changes in response.

Look, there was a period when you could promote cryptocurrency and crypto assets legitimately on Facebook. And then as different initial coin offerings (ICOs) drew attention and people were getting ripped off, Facebook updated their advertising policy and banned advertising cryptocurrencies.

And now too with housing, lending, and federally regulated industries, Facebook is playing catch up. Right now, HUD’s lawsuit is asking Facebook to acknowledge that their targeting methods and their algorithm are discriminatory, and take steps towards making it less discriminatory.

I foresee Facebook placing limits on what can be advertised and what can be used to target people, and depending on how the lawsuit plays out, they might need to update their algorithm.

And the saga continues...

Since the original story broke, Facebook updated their ad preferences to allow Facebook users to control exactly which brands, pages, and interests are allowed to be tracked.
